For 4 days, the c-root server maintained by Cogent lost touch with its 12 peers.

For more than four days, a server at the very core of the Internet’s domain name system was out of sync with its 12 root server peers due to an unexplained glitch that could have caused stability and security problems worldwide. This server, maintained by Internet carrier Cogent Communications, is one of the 13 root servers that provision the Internet’s root zone, which sits at the top of the hierarchical distributed database known as the domain name system, or DNS.

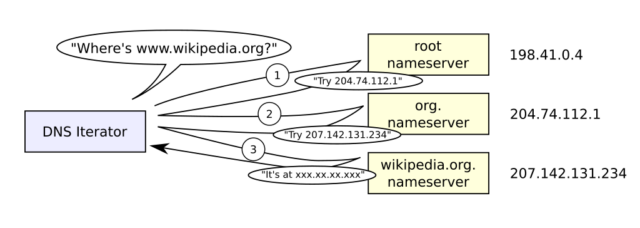

When someone enters wikipedia.org in their browser, the human-friendly domain name must first be translated into an IP address. This is where the domain name system comes in. The first step in the DNS process is for the browser to query the stub resolver in the local operating system. The stub resolver forwards the query to a recursive resolver, which may be provided by the user’s ISP or by a service such as Cloudflare’s 1.1.1.1 or Google’s 8.8.8.8.

If the answer isn’t already cached, the recursive resolver contacts the c-root server or one of its 12 peers to learn which name servers are authoritative for the .org top-level domain. The .org name server then refers the resolver to Wikipedia’s name server, which returns the IP address. In the following diagram, the recursive server is labeled “iterator.”
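The referral chain described above can be sketched as a loop that keeps following referrals until some server answers. This is a minimal Python sketch, assuming a hypothetical, hard-coded set of servers (`root`, `org-tld`, `wikipedia-auth`) and an address from the 192.0.2.0/24 documentation range rather than live DNS queries. A real recursive resolver would send queries over the network, cache what it learns, and validate DNSSEC along the way.

```python
from typing import Dict, List, Tuple

# Hypothetical in-memory name servers. Each one either answers a name
# directly or refers the resolver to a server lower in the hierarchy.
SERVERS: Dict[str, Dict[str, Dict[str, str]]] = {
    "root": {"referrals": {"org.": "org-tld"}, "answers": {}},
    "org-tld": {"referrals": {"wikipedia.org.": "wikipedia-auth"}, "answers": {}},
    "wikipedia-auth": {"referrals": {}, "answers": {"wikipedia.org.": "192.0.2.10"}},
}


def in_zone(qname: str, zone: str) -> bool:
    """True if qname equals zone or sits below it (matching on label boundaries)."""
    return qname == zone or qname.endswith("." + zone)


def iterate(qname: str, start: str = "root") -> Tuple[str, List[str]]:
    """Follow referrals from the root until a server returns an answer."""
    qname = qname.rstrip(".") + "."
    server, path = start, [start]
    while True:
        data = SERVERS[server]
        if qname in data["answers"]:
            return data["answers"][qname], path
        # Choose the most specific referral: the longest zone containing qname.
        matches = [zone for zone in data["referrals"] if in_zone(qname, zone)]
        if not matches:
            raise LookupError(f"{server} has no data for {qname}")
        server = data["referrals"][max(matches, key=len)]
        path.append(server)


address, path = iterate("wikipedia.org")
print(address, path)  # 192.0.2.10 ['root', 'org-tld', 'wikipedia-auth']
```

The root’s only job in this sketch is the first hop: it never knows Wikipedia’s address, only where to ask next. That is why a root server serving outdated referrals can misdirect every lookup that passes through it.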

Given the crucial role a root server plays in ensuring one device can find any other device on the Internet, there are 13 of them geographically dispersed all over the world. Each root server is, in fact, a cluster of servers that are also geographically dispersed, providing even more redundancy. Normally, the 13 root servers, run by a dozen different organizations, march in lockstep. When a change is made to the contents they host, it generally appears on all of them within a few seconds or, at most, a few minutes.

Strange events at the C-root name server

This tight synchronization is crucial for ensuring stability. If one root server directs lookups to one intermediate server and another root server sends lookups to a different intermediate server, the Internet as we know it could collapse. More important still, root servers store the cryptographic keys necessary to authenticate some of the intermediate servers under a mechanism known as DNSSEC. If keys aren’t identical across all 13 root servers, there’s an increased risk of attacks such as DNS cache poisoning.

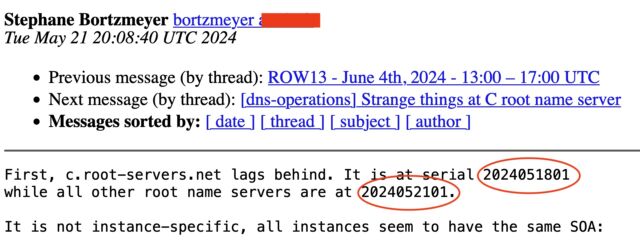

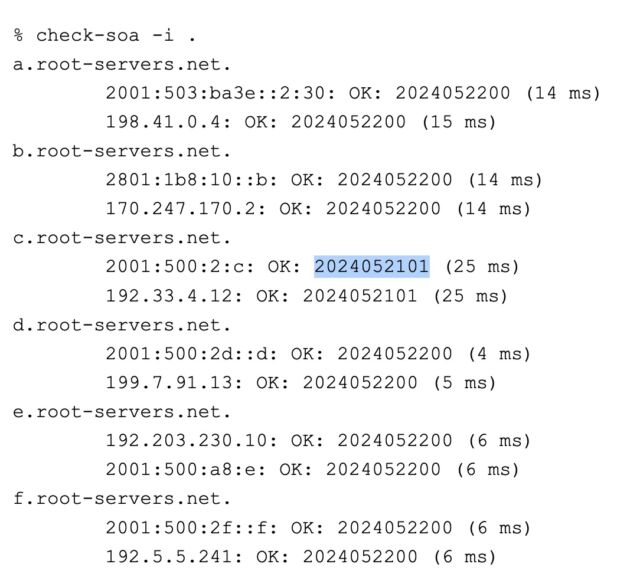

For reasons that remain unclear outside of Cogent—which declined to comment for this post—all 12 instances of the c-root it’s responsible for maintaining suddenly stopped updating on Saturday. Stéphane Bortzmeyer, a French engineer who was among the first to flag the problem in a Tuesday post, noted then that the c-root was three days behind the rest of the root servers.
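A lag like this shows up in the root zone’s SOA serial number, which each root server reports for the copy of the zone it is currently serving. The sketch below is a hypothetical helper, not a reconstruction of how the lag was actually detected. It compares serials and reports how many days each server trails the newest one, assuming the date-based YYYYMMDDNN layout that root zone serials follow. The serial values are made up for illustration; real ones could be collected with a query such as `dig @c.root-servers.net . SOA +short`.

```python
from datetime import date
from typing import Dict


def serial_date(serial: int) -> date:
    """Read the date out of a YYYYMMDDNN serial (NN is a same-day revision)."""
    text = str(serial)
    return date(int(text[:4]), int(text[4:6]), int(text[6:8]))


def lagging_servers(serials: Dict[str, int]) -> Dict[str, int]:
    """Map each server whose serial trails the newest one to its lag in days.

    Plain integer comparison is enough for this sketch; real tooling would
    use RFC 1982 serial-number arithmetic to handle wraparound.
    """
    newest = max(serials.values())
    newest_day = serial_date(newest)
    return {
        name: (newest_day - serial_date(serial)).days
        for name, serial in serials.items()
        if serial < newest
    }


# Hypothetical observations: 12 roots current, one stuck three days back.
observed = {letter: 2024052101 for letter in "abdefghijklm"}
observed["c"] = 2024051801
print(lagging_servers(observed))  # {'c': 3}
```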

The lag was further noted on Mastodon: https://fosstodon.org/@bert_hubert/112480485891997247/embed


By mid-day Wednesday, the lag was shortened to about one day.

By late Wednesday, the c-root was finally up to date.


The lag prompted engineers to delay work that had been scheduled for this week on the name servers that handle lookups for domain names ending in .gov and .int. The plan had been to update the servers’ DNSSEC to use ECDSA cryptographic keys. With no guarantee that the new keys would be rolled out uniformly to all root servers, those plans had to be scrubbed.
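What the root zone actually publishes for a TLD such as .gov is a DS record: a digest of the TLD’s key-signing key. That is why a DNSSEC key change for .gov depends on every root server serving the same, current DS data. The minimal sketch below computes a SHA-256 DS digest (digest type 2) over the owner name in DNS wire format followed by the DNSKEY RDATA, as DNSSEC specifies, and shows that a DS taken from a stale copy of the zone does not match the new key. The key bytes are placeholders, not real RSA or ECDSA keys, and a real algorithm roll publishes old and new DS records side by side for a while; the sketch skips that step.

```python
import hashlib
import struct

RSASHA256 = 8         # DNSSEC algorithm number for RSA/SHA-256
ECDSAP256SHA256 = 13  # DNSSEC algorithm number for ECDSA P-256 with SHA-256


def name_to_wire(name: str) -> bytes:
    """Canonical wire format: lowercase, length-prefixed labels, then a zero byte."""
    wire = b""
    for label in name.rstrip(".").lower().split("."):
        if label:  # the root name "." contributes no labels
            raw = label.encode("ascii")
            wire += bytes([len(raw)]) + raw
    return wire + b"\x00"


def dnskey_rdata(flags: int, algorithm: int, public_key: bytes) -> bytes:
    """DNSKEY RDATA: 16-bit flags, protocol (always 3), algorithm, key bytes."""
    return struct.pack("!HBB", flags, 3, algorithm) + public_key


def ds_digest(owner: str, rdata: bytes) -> str:
    """DS digest type 2: SHA-256 over owner name (wire format) + DNSKEY RDATA."""
    return hashlib.sha256(name_to_wire(owner) + rdata).hexdigest().upper()


def matches_ds(owner: str, rdata: bytes, published_digest: str) -> bool:
    """Does this DNSKEY hash to the digest the parent zone published?"""
    return ds_digest(owner, rdata) == published_digest.upper()


# Flags 257 = zone key + secure entry point (a key-signing key).
# Placeholder key bytes; real keys come from the zone's signing system.
old_ksk = dnskey_rdata(257, RSASHA256, b"\x01" * 64)
new_ksk = dnskey_rdata(257, ECDSAP256SHA256, b"\x02" * 64)

fresh_root_ds = ds_digest("gov.", new_ksk)  # what an up-to-date root serves
stale_root_ds = ds_digest("gov.", old_ksk)  # what a lagging root still serves
print(matches_ds("gov.", new_ksk, fresh_root_ds))  # True
print(matches_ds("gov.", new_ksk, stale_root_ds))  # False
```

A resolver that happened to fetch the .gov DS from the lagging root after the old key was withdrawn would have nothing that matches the new key, which is the failure the .gov and .int teams chose to avoid by pausing.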

“We are fully aware and monitoring the situation around the C-root servers and will not proceed with the ongoing DNSSEC algorithm roll until it has stabilized,” Christian Elmerot, a Cloudflare engineer in charge of the .gov DNSSEC transition, announced Wednesday. Engineers planning the transition to the .int server made the same call, but only after some people worried the work would proceed despite the problem.

In an interview, Elmerot said the real-world effects of the update problems were minimal, but over time, the impact would have grown. He explained:

As changes accumulate in the root zone, the difference between the versions begins to matter more. Typical changes in the root zone are changing delegations (NS records), rotating DS records for DNSSEC updates, and with all this come updates to DNSSEC signatures. If the differences remain, then the outdated root server will see the DNSSEC signatures expire and that will begin to have more marked consequences. Using more than one root server lowers the chance that a resolver uses the lagging root server.
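Elmerot’s point about expiring signatures comes down to the validity window that every RRSIG record carries. A validating resolver accepts a signature only between its inception and expiration times, so a stale copy of the zone keeps validating until its signatures run out. A minimal sketch, assuming hypothetical timestamps rather than the root zone’s actual signing schedule, and ignoring the serial-number arithmetic and clock-skew allowances that real validators apply:

```python
from datetime import datetime, timezone


def parse_rrsig_time(stamp: str) -> datetime:
    """Parse the YYYYMMDDHHmmSS presentation form of an RRSIG time field as UTC."""
    return datetime.strptime(stamp, "%Y%m%d%H%M%S").replace(tzinfo=timezone.utc)


def signature_is_current(inception: str, expiration: str, now: datetime) -> bool:
    """A validator accepts an RRSIG only while inception <= now <= expiration."""
    return parse_rrsig_time(inception) <= now <= parse_rrsig_time(expiration)


# Hypothetical validity window on a signature in the stale copy of the zone.
inception, expiration = "20240518000000", "20240531000000"

# A few days into the lag: outdated, but the signature still verifies.
print(signature_is_current(inception, expiration,
                           datetime(2024, 5, 22, 12, tzinfo=timezone.utc)))  # True

# Had the lag dragged on past the expiration, validation would start failing.
print(signature_is_current(inception, expiration,
                           datetime(2024, 6, 1, tzinfo=timezone.utc)))  # False
```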

The misperforming c-root coincided with another glitch that prevented many people from reaching the c-root website, which is also maintained by Cogent. Many assumed that the cause for both problems was the same. It later turned out that the site problems were the result of Cogent transferring the IP address used to host the site to Orange Ivory Coast, an African subsidiary of French telecom Orange.

The mixup prompted Bortzmeyer to joke, “Reported to Cogent (ticket HD303751898) but they do not seem to understand that they manage a root name server.”

The errors come as Cogent has, in recent months, ended arrangements with several carriers to exchange each other’s traffic, an arrangement known as peering. The most recent termination occurred last Friday, when Cogent partially “depeered” Indian carrier Tata Communications, a move that made many Tata-hosted sites in the Asia Pacific region unreachable for Cogent customers.

Late Wednesday, Cogent published the following statement, indicating it wasn’t aware of the glitch until Tuesday and that it took roughly another 25 hours to fix it:

On May 21 at 15:30 UTC the c-root team at Cogent Communications was informed that the root zone as served by c-root had ceased to track changes from the root zone publication server after May 18. Analysis showed this to have been caused by an unrelated routing policy change whose side effect was to silence the relevant monitoring systems. No production DNS queries went unanswered by c-root as a result of this outage, and the only impact was on root zone freshness. Root zone freshness as served by c-root was fully restored on May 22 at 16:00 UTC.
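The times in Cogent’s statement can be checked with a little date arithmetic. This sketch assumes the year is 2024, which the statement doesn’t give, and treats May 18 as the last day whose changes c-root tracked.

```python
from datetime import date, datetime, timezone

# Times quoted in Cogent's statement; the year is an assumption.
informed = datetime(2024, 5, 21, 15, 30, tzinfo=timezone.utc)
restored = datetime(2024, 5, 22, 16, 0, tzinfo=timezone.utc)
stale_since = date(2024, 5, 18)  # "ceased to track changes ... after May 18"

# Time from Cogent being informed to full root zone freshness.
repair_hours = (restored - informed).total_seconds() / 3600

# Calendar days between the last tracked day and the restoration date.
calendar_days = (restored.date() - stale_since).days

print(f"Notification to full freshness: {repair_hours} hours")  # 24.5 hours
print(f"Calendar days out of sync: {calendar_days}")            # 4
```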

Initially, some people speculated that the depeering of Tata Communications, the c-root site outage, and the update errors to the c-root itself were all connected somehow. Given the vagueness of the statement, the relationship among those events still isn’t entirely clear.