With half the world’s population invited to vote this year, and fears mounting over deepfakes and other fabricated narratives being circulated to sway elections, now is probably a good time to be running a digital watermarking company.

“I’ll say this,” Eric Wengrowski, founder of Steg.AI, tells me. “When you’re in the watermarking business and the president of the United States is mentioning multiple times in White House briefings that we need watermarking, that’s a good place to be.”

Once a term restricted largely to the insertion of an image into a bank note or bill to prove its authenticity as legal tender, watermarking is likely to become ubiquitous online as it plays an increasingly pivotal role in the fight to discern fact from falsehood on the internet.

“There’s been a surge in activity around watermarking in Congress, some of the legislation that’s being passed in the United States to support watermarking technology development, particularly for information security applications,” Wengrowski says.

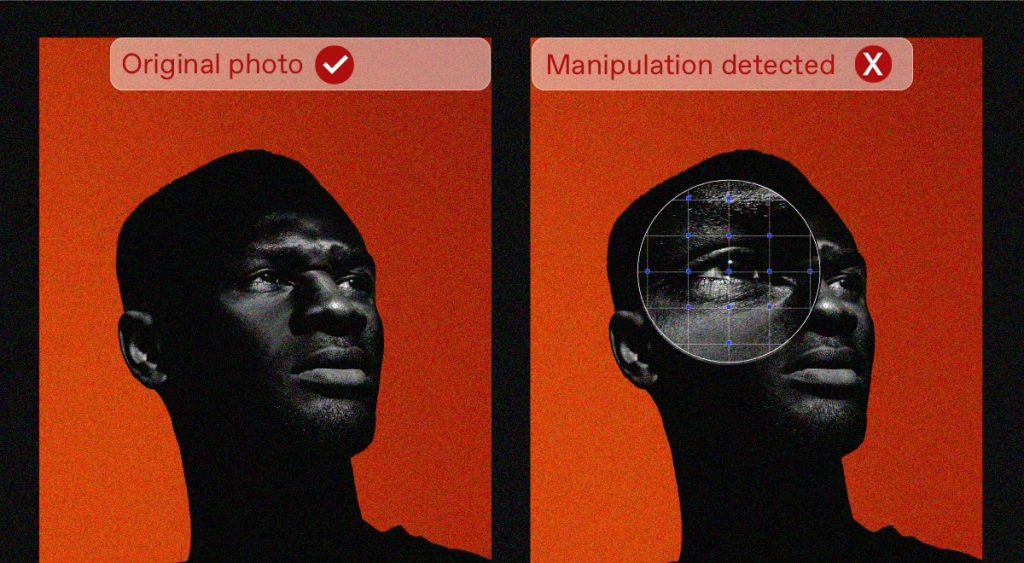

This is a good development for his company, Steg.AI, which specializes in a technique known as “light field messaging.” This entails embedding special data in a digital image or sound recording. The data is not immediately visible or audible, but it is designed to survive even if the content is doctored or copied.

“Light field messaging allows us to embed a lot of information, and it can be very resistant to edits or any kind of manipulation,” he explains. “And it looks really good, you know, virtually indistinguishable – so it’s really difficult to tell that it’s there. Ultimately, what it means is that we’re able to add a new channel of information to digital content.”

This capability, he suggests, has many useful applications, not least providing a means of establishing content authenticity in an era of burgeoning deepfakes and generative AI.

In case you’ve been vacationing on Mars over the past couple of years, here is the situation in a nutshell. The seismic shifts caused by OpenAI’s automated content generator ChatGPT are set to intertwine with a historic year in politics that will see four billion people asked to elect their leaders. This comes at a time when jurisdictions as diverse as the US, Russia, Ukraine, Israel, Gaza, and China are caught up in what many see as existential struggles of one form or another, with potentially devastating consequences for the losers.

Machine-generated fakes swaying the outcome of an otherwise democratic election in 2024? You could say it’s kind of a big deal.

Don’t jump to conclusions

Perhaps that’s why Wengrowski seems a bit hesitant about nailing his colors to the mast when it comes to billing Steg.AI as a company that calls out deepfakes for a living.

“Just to be very clear, we’re not in the business of looking at photos or videos or anything like that and telling you this is definitely real and something that you can trust,” he stresses. “Instead, what we’re doing is establishing and verifying provenance – seeing where content has come from.”

He continues: “I’m looking at an image. Where did it originate from? A camera sensor or an algorithm? If it did come out of an algorithm, whose algorithm? What did they do with it? If it came out of the camera sensor, how has it been edited? Has it been touched with AI in any way before getting to your screen? It’s not really a good idea at all to try and look at content and decide, hey, is this a deepfake or is this original.”

The reason for this, he says, is that doctored content is not always used for malicious or harmful purposes, while genuine pictures (or, for that matter, quotes) can easily be taken out of context and used to distort a narrative.

“Just because something is a deepfake or synthetic content doesn’t mean it’s bad,” says Wengrowski. “We work with companies who use generative AI for translation services, professional videos, training, stuff like that. There’s nothing malicious about this content. And similarly, just because something came out of the camera doesn’t mean it’s good. It could still be incredibly misleading. I mean, that’s what movies are, right?”

But still, with private-sector organizations anxious to hallmark their digital IP and state actors likewise keen to ensure their reputations are not abused by the spread of deepfakes, business must surely be booming for watermarking.

A tool that Steg.AI developed with fellow AI companies Truepic and Hugging Face, built on a Stable Diffusion text-to-image model, lets prospective clients test the watermarking software for themselves.

“These generative AI and deepfake algorithms will continue to get better,” he says. “The problem of distinguishing – this is definitely AI-generated versus this is not – the gap is getting smaller and smaller. We’re providing an opt-in service for our customers, so that they can mark their content and say: this is ours. They can do it in a way that’s public so that others can see the origin of content, or they can do it in a way that’s private, so they can track their assets and see how they’re being used.”

A future essential public service?

I put it to Wengrowski that one day he might find Steg.AI going in directions he could not have foreseen – what if the need to verify provenance becomes so pressing that the public is forced to depend on a free-at-point-of-use service, curated by the government and paid for by taxes, but provided by companies such as his?

“Oh, absolutely,” he replies when I ask him if he’d be receptive to the idea. “The technology is there, it’s more about the will to get the business model for something like this – make sure it really works in the way it needs to, for whoever the intended users are, and especially if it’s the public. I love the idea of providing a service to help people make decisions based on facts.”

Citing fact-checking websites Snopes and PolitiFact as examples, he adds: “There’s already versions of this for news content in general. All these great journalistic services that say what’s real, what’s not – especially in the political realm. It’s only fair that technology is used responsibly. For a democracy to work, we need trust, facts, a very well-informed public. Technology like ours is a great tool for providing that.”

Of course, only time will tell how big a role audio-visual content plays in the disinformation wars around elections – and therefore how big a problem it proves to be.

“Humans are super-visual creatures, right?” says Wengrowski. “It’s really how we interpret the world. We’ve been surprised to see that a forensic watermarking solution like Steg provides has massive appeal. Industries from deepfakes to online magazines to consumer electronics – for us as a company, one of the challenges is focusing on the most important markets to go after, where there is the biggest need.”

Don’t expect a panacea

That said, he doesn’t think his technology will be a cure-all for disinformation in the run-up to this year’s many elections; it’s simply too soon to expect that kind of result.

“It’s very validating to see the rest of the world catch on, that this is a really attractive solution to the threat of misinformation,” he says, but adds: “Forensic watermarks, at least at first, probably aren’t going to stop the most malicious actors from creating harmful content.”

However, he does think it will be a useful tool for preventing material created with benign intentions from being misused by bad actors. It can do this simply by making such content identifiable as genuine in the first place, and thereby fostering an online culture where people are encouraged to look before they leap.

“It’s going to be a really big deal for the good guys,” he says, “who want to create content, either in a camera or using an algorithm, and say: ‘No, I’m being open and transparent, this is real, this is where this came from, you can check yourself.’”

He adds: “Part of the adjustment is going to be getting the public and social media platforms and all the other places where people are to get in the habit of saying: ‘OK, well, this thing looks real, but seeing is not believing anymore – let me really look and see where this is coming from.’ And make my decision that way as to how much I trust this piece of content.”