A new GPU generation did very little to change the speed you get for your money.

In many ways, 2023 was a long-awaited return to normalcy for people who build their own gaming and/or workstation PCs. For the entire year, most mainstream components have been available at or a little under their official retail prices, making it possible to build all kinds of PCs at relatively reasonable prices without worrying about restocks or waiting for discounts. It was a welcome continuation of some GPU trends that started in 2022. Nvidia, AMD, and Intel could release a new GPU, and you could consistently buy that GPU for roughly what it was supposed to cost.

That’s where we get into how frustrating 2023 was for GPU buyers, though. Cards like the GeForce RTX 4090 and Radeon RX 7900 series launched in late 2022 and boosted performance beyond what any last-generation cards could achieve. But 2023’s midrange GPU launches were less ambitious. Not only did they offer the performance of a last-generation GPU, but most of them did it for around the same price as the last-gen GPUs whose performance they matched.

The midrange runs in place

Not every midrange GPU launch will get us a GTX 1060, a card that was roughly 50 percent faster than its immediate predecessor and that beat the previous-generation GTX 980 despite costing just a bit over half as much money. But even if your expectations were low, this year’s midrange GPU launches have been underwhelming.

The worst was probably the GeForce RTX 4060 Ti, which sometimes struggled to beat the card it replaced at around the same price. The 16GB version of the card was particularly maligned since it was $100 more expensive but was only faster than the 8GB version in a handful of games.

The regular RTX 4060 was slightly better news, thanks partly to a $30 price drop from where the RTX 3060 started. The performance gains were small, and a drop from 12GB to 8GB of RAM isn’t the direction we prefer to see things move, but it was still a slightly faster and more efficient card at around the same price. AMD’s Radeon RX 7600, RX 7700 XT, and RX 7800 XT all belong in this same broad category: some improvements, but generally similar performance to previous-generation parts at similar or slightly lower prices. Not an exciting leap for people with aging GPUs who waited out the GPU shortage to get an upgrade.

The best midrange card of the generation—and at $600, we’re definitely stretching the definition of “midrange”—might be the GeForce RTX 4070, which can generally match or slightly beat the RTX 3080 while using much less power and costing $100 less than the RTX 3080’s suggested retail price. That seems like a solid deal once you consider that the RTX 3080 was essentially unavailable at its suggested retail price for most of its life span. But $600 is still a $100 increase from the 2070 and a $220 increase from the 1070, making it tougher to swallow.
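
As a rough back-of-the-envelope sketch of that value math, the snippet below uses only the prices cited or implied above. It assumes, for simplicity, that the RTX 4070 exactly matches the RTX 3080; the function name and the `PRICES` table are illustrative, not taken from any benchmark tool.

```python
# Launch prices in US dollars, as cited or implied in the paragraph above.
# The 3080, 2070, and 1070 figures are derived from the stated price gaps.
PRICES = {"RTX 4070": 600, "RTX 3080": 700, "RTX 2070": 500, "GTX 1070": 380}


def perf_per_dollar_ratio(relative_perf, new_price, old_price):
    """New card's performance per dollar as a multiple of the old card's."""
    return relative_perf * old_price / new_price


# Assumption: the RTX 4070 exactly matches the RTX 3080 (relative_perf = 1.0).
value_gain = perf_per_dollar_ratio(1.0, PRICES["RTX 4070"], PRICES["RTX 3080"])
print(f"RTX 4070 vs. RTX 3080: {value_gain:.2f}x performance per dollar")

# Generation-over-generation price increases for the x70 tier.
for older in ("RTX 2070", "GTX 1070"):
    increase = PRICES["RTX 4070"] - PRICES[older]
    print(f"RTX 4070 costs ${increase} more than the {older}")
```

Under those assumptions, the 4070 delivers only about 17 percent more performance per dollar than the 3080’s list price did, which is a modest gain for a full generation.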

In all, 2023 wasn’t the worst time to buy a $300 GPU; that dubious honor belongs to the depths of 2021, when you’d be lucky to snag a GTX 1650 for that price. But “consistently available, basically competent GPUs” are harder to be thankful for the further we get from the GPU shortage.

Marketing gets more misleading

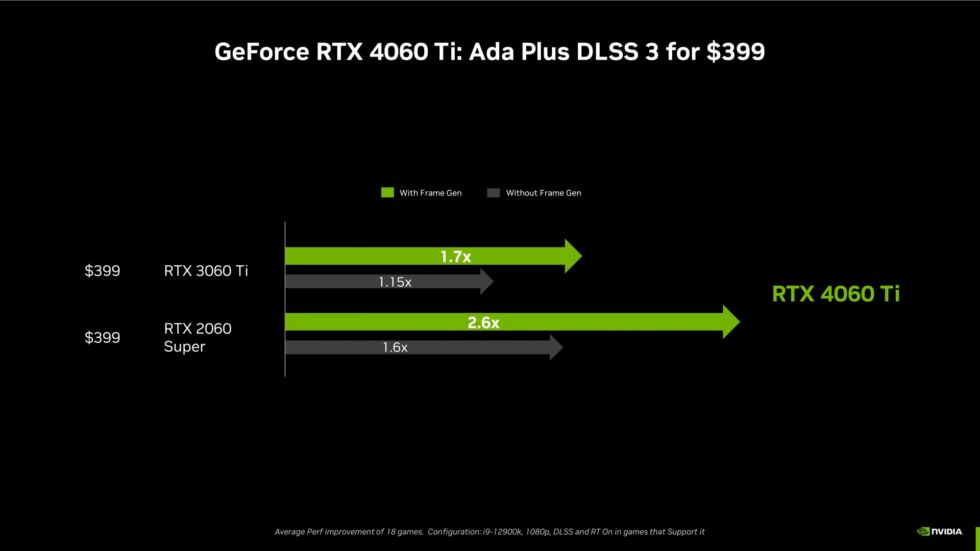

If you just looked at Nvidia’s early performance claims for each of these GPUs, you might think that the RTX 40-series was an exciting jump forward.

But these numbers were only possible in games that supported these GPUs’ newest software gimmick, DLSS Frame Generation (FG). The original DLSS and DLSS 2 improve performance by upsampling the images generated by your GPU, generating interpolated pixels that turn lower-res images into higher-res ones without the blurriness and loss of image quality you’d get from simple upscaling. DLSS FG generates entire frames in between the ones being rendered by your GPU, theoretically providing big frame rate boosts without requiring a powerful GPU.

The technology is impressive when it works, and it’s been successful enough to spawn hardware-agnostic imitators like the AMD-backed FSR 3 and an alternate implementation from Intel that’s still in early stages. But it has notable limitations—mainly, it needs a reasonably high base frame rate to have enough data to generate convincing extra frames, something that these midrange cards may struggle to do. Even when performance is good, it can introduce weird visual artifacts or lose fine detail. The technology isn’t available in all games. And DLSS FG also adds a bit of latency, though this can be offset with latency-reducing technologies like Nvidia Reflex.

As another tool in the performance-enhancing toolbox, DLSS FG is nice to have. But to put it front-and-center in comparisons with previous-generation graphics cards is, at best, painting an overly rosy picture of what upgraders can actually expect.

Intel plays catch-up

Intel Arc’s first year on the market has cemented its status as “a good first try.” It could have gone either way based on last year’s launch, which was hampered by buggy drivers and inconsistent performance, especially in older games.

But to its credit, Intel significantly improved its software in the last year, eliminating bugs, fixing annoying problems, and boosting performance in older games. The company has made the most progress on some older DirectX 9 and DirectX 11 games, thanks at least in part to code translation technologies that allow those APIs to run on top of DirectX 12 and/or Vulkan, newer low-level graphics APIs that Arc GPUs are better at handling.

Intel has also remained relatively competitive on price, thanks partly to Nvidia and AMD’s aforementioned underwhelming midrange GPU launches. The Arc A750 is consistently available for $200 or a bit less, making it a solid value for TK

But will Intel stay committed to the GPU market? At this point, the company is still moving ahead with its current road map, which should get us next-gen “Battlemage” GPUs at some point in 2024. Arc technology and branding have also made their way into Intel’s latest integrated GPUs.

But current Arc GPUs are thoroughly outmatched in performance and power efficiency by Nvidia and AMD’s offerings, locking Intel out of the $300-plus GPU market. In the last year, the graphics division has seen some leadership shake-ups, and Intel seems particularly eager to shed unprofitable side hustles (like its crypto-mining chips or the NUC mini desktops) as it tries to improve its financial position. Arc cards have yet to break out of the “other” category in the Steam Hardware Survey (though, to be fair, most of AMD’s RX 7000 GPUs haven’t either).

It’s hard to imagine that Intel will keep plowing the resources into developing and marketing new GPUs if it seems like it will be stuck selling a relatively small handful of low-margin midrange and low-end chips. 2023 was a decent year for Arc, but 2024 may determine its future.

Power efficiency improves

Maybe the performance of 2023’s new GPUs has been underwhelming, but they have a few things going for them. The biggest is power efficiency: if you’re using a newer and more power-efficient manufacturing process, and you’re not using the extra headroom to boost performance, then you’ll mainly benefit from lower power use.

Nvidia’s RTX 40-series cards have been the biggest beneficiaries here. Take the RTX 4070, which performs a lot like an RTX 3080 but uses about 60 percent as much power. An RTX 4060 is only 15 or 20 percent faster than an RTX 3060 most of the time, but it does that while using around two-thirds as much power. That can also help these GPUs run cooler, and we’ve even seen some good small-form factor and low-profile options as a result (though many GPU makers still seem committed to their gigantic triple-fan overkill coolers).
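
To put those figures in performance-per-watt terms, here is a minimal sketch that uses only the rough ratios quoted above. The helper name and the `comparisons` table are hypothetical, and the inputs are the article’s approximations rather than measured benchmark or power-draw data.

```python
def perf_per_watt_gain(relative_perf: float, relative_power: float) -> float:
    """Return the new card's performance per watt as a multiple of the old card's.

    Both arguments are ratios of new card to old card (1.0 means "the same").
    """
    if relative_power <= 0:
        raise ValueError("relative_power must be positive")
    return relative_perf / relative_power


# (relative performance, relative power), taken from the rough figures above.
comparisons = {
    "RTX 4070 vs. RTX 3080": (1.00, 0.60),
    "RTX 4060 vs. RTX 3060 (15% faster)": (1.15, 2 / 3),
    "RTX 4060 vs. RTX 3060 (20% faster)": (1.20, 2 / 3),
}

for label, (perf, power) in comparisons.items():
    gain = perf_per_watt_gain(perf, power)
    print(f"{label}: about {gain:.1f}x performance per watt")
```

By this rough math, both cards land somewhere around 1.7 to 1.8 times the performance per watt of the cards they replace, a much bigger generational jump than the raw frame rates suggest.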

AMD’s RX 7000-series cards also improved on the power efficiency front, just not as dramatically as Nvidia’s. Either way, it’s a win.

Ask PC gamers whether they prefer higher frame rates or better power efficiency, and most of them will probably choose frame rates. But as a longtime lover of tiny, space-constrained ITX desktop builds, I’m always happy to see more powerful GPUs that can fit in tighter spaces.

Export controls spike high-end prices

Most GPU buyers didn’t have to worry about stock issues or rising prices this year. But especially in the last few months, prices have been creeping back up for one GPU already infamous for its cost: the GeForce RTX 4090. The cheapest models on Newegg and Amazon currently in stock start at around $2,000, $400 more than the MSRP.

And it’s not that thousands of people are suddenly deciding that they want to buy a GPU that costs more than most people’s entire gaming PC. The main culprit may be new export controls. The US said that 4090s could no longer be sold in China starting in mid-November, part of a steadily escalating series of restrictions that has also caught up most of Nvidia’s server GPUs. This led to some alleged hoarding of 4090s by Chinese buyers, and Nvidia reportedly rushed as many 4090s as it could into the Chinese market before the ban went into effect, leaving fewer cards for everyone else (at least temporarily).

One reason for the 4090’s popularity in China is also related to export controls—Nvidia’s Tensor Core GPUs for AI servers are also restricted in China, which has prompted some Chinese factories to repackage 4090 GPUs as AI accelerators with dual-slot coolers that allow for higher density installations.

These price increases will hopefully be temporary as the market adjusts to the new export controls and supply and demand even back out. Nvidia is also said to be readying a mid-generation “Super” refresh of many 4080 and 4070-series GPUs, which could further scramble pricing for higher-end cards. Regardless, it’s not a great time to want a 4090.